Category: Product

-

Owning the Problem and the Decision You Didn’t Make as a Product Manager

If you want to be seen as the owner of a product area, you have to take ownership of all its past decisions: the good, the bad and the stupid. Here is how to do it.

-

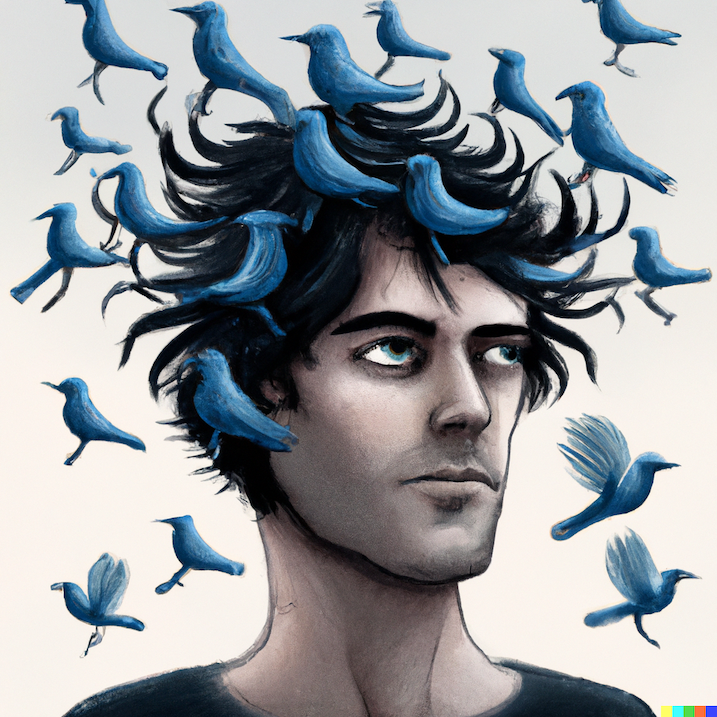

Stopping Thinking in Tweets

I used to love Twitter, maybe too much. (PS: Now I am on Threads.) One of the reasons was that it seemed like a great place not just for consuming content but also for publishing it. You could express yourself in short tweets or tweet threads, you could get instant feedback, you could…

-

Twitter Blue and the credibility crisis

As some of you already know, Twitter was perhaps my favorite social media website. I loved using Twitter and was, and still am, very active (though less so now). I was excited when Musk said he wanted to buy the platform, but looking at all his shenanigans, from trying to walk out of the deal…

-

Right to Not Train: Written by Human

Can AR Rahman block you from being inspired by his music? Can James Cameron prevent you from making a movie that uses the narrative structure of Avatar? All art is stolen, all innovation is a copy; we all stand on the shoulders of giants. But what if we are the last giants standing…

-

AI is our Generation Gap

You will feel old soon. Remember the generation gap you feel when you try to explain tech to your parents? Well, #generativeAI will be our generation’s generation gap. Eventually, only a few of our generation will realize its full potential. But our kids will be born into it and shaped by it, like computers took our parents’ generation by…

-

Measuring Impact of small changes to the product

How do you measure the effect of the small changes you are making to your product?

-

There is nothing shady about Twitter’s mDAU metric

mDAU is not really that nonstandard, nor is it particularly bad, nor does Twitter’s disclosed methodology seem shady.

-

No, Twitter did not say only 5% of users are spam

The media deliberately conflates mDAU with DAU, but the difference is very important.